SEO depends on Google being able to crawl your website and index all your primary pages, and Google is sometimes picky about which pages on a site it will index. Errors reported in Google Search Console can reduce your traffic, so if your traffic has dropped, the errors covered here are worth checking.

Reasons for This Error

- You changed your hosting.

- You changed your hosting but did not check that the nameservers point to the new host.

- You use Cloudflare and did not remove the old site data.

- Your robots.txt file disallows Googlebot.
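The last reason in the list can be checked offline. Below is a minimal sketch, assuming you have copied the text of your robots.txt file; it uses Python's standard `urllib.robotparser` module. The helper name `googlebot_allowed`, the two sample rule sets, and the `www.example.com` URL are all hypothetical and only for illustration.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt: str, url: str) -> bool:
    """Return True if the given robots.txt text lets Googlebot fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# Hypothetical robots.txt that blocks Googlebot from the whole site.
blocking_rules = """
User-agent: Googlebot
Disallow: /
"""

# Hypothetical robots.txt that allows every crawler everywhere.
open_rules = """
User-agent: *
Disallow:
"""

# Placeholder URL, not taken from the article.
sitemap_url = "https://www.example.com/sitemap_index.xml"

print(googlebot_allowed(blocking_rules, sitemap_url))  # False
print(googlebot_allowed(open_rules, sitemap_url))      # True
```

If the first case matches your own file, Google cannot fetch the sitemap no matter how many times you resubmit it.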

Couldn’t Fetch Sitemap Error on Google Search Console: Solved

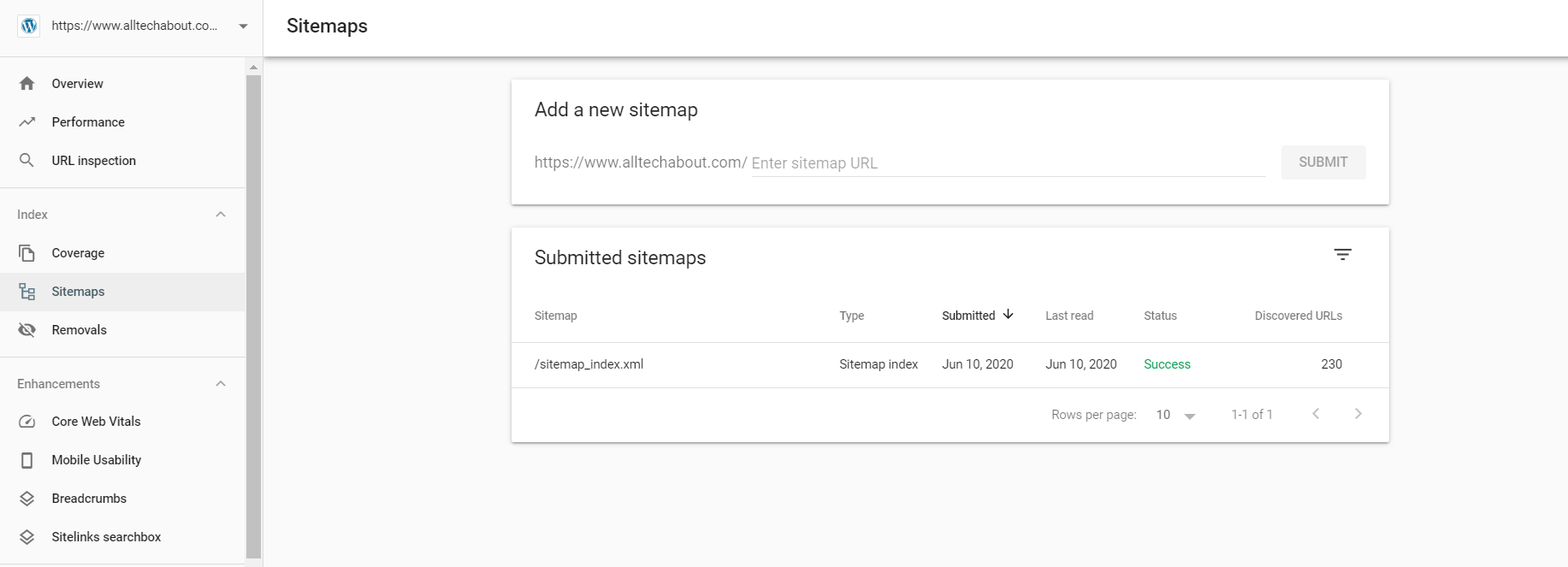

- Log in to Google Search Console.

- Click “Sitemaps” in the left panel/menu.

- Under “Add a new sitemap”, enter the URL of the sitemap you are trying to index.

How to Fix the Couldn’t Fetch Error in Google Search Console

- Log in to Google Search Console.

- Click “Sitemaps” in the left panel/menu.

- Remove the old sitemap.

- Under “Add a new sitemap”, enter the URL of the sitemap you are trying to index.

- Add a forward slash “/” just after the last forward slash in the URL (see screenshot).

- Click Submit.

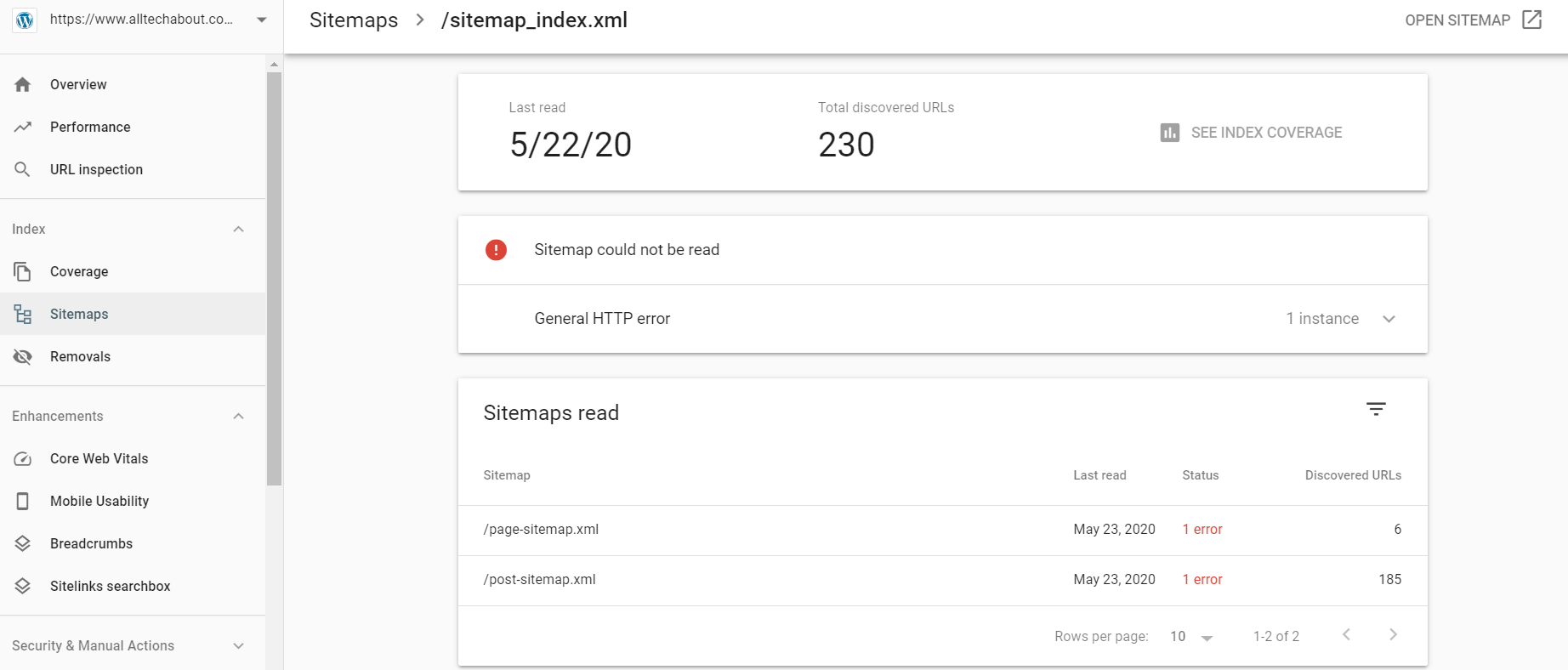

Sitemap Could Not Be Read: General HTTP Error

My sitemap is throwing the error “Sitemap could not be read – General HTTP error”.

My sitemap is: https://www.domainmame/sitemap_index.xml

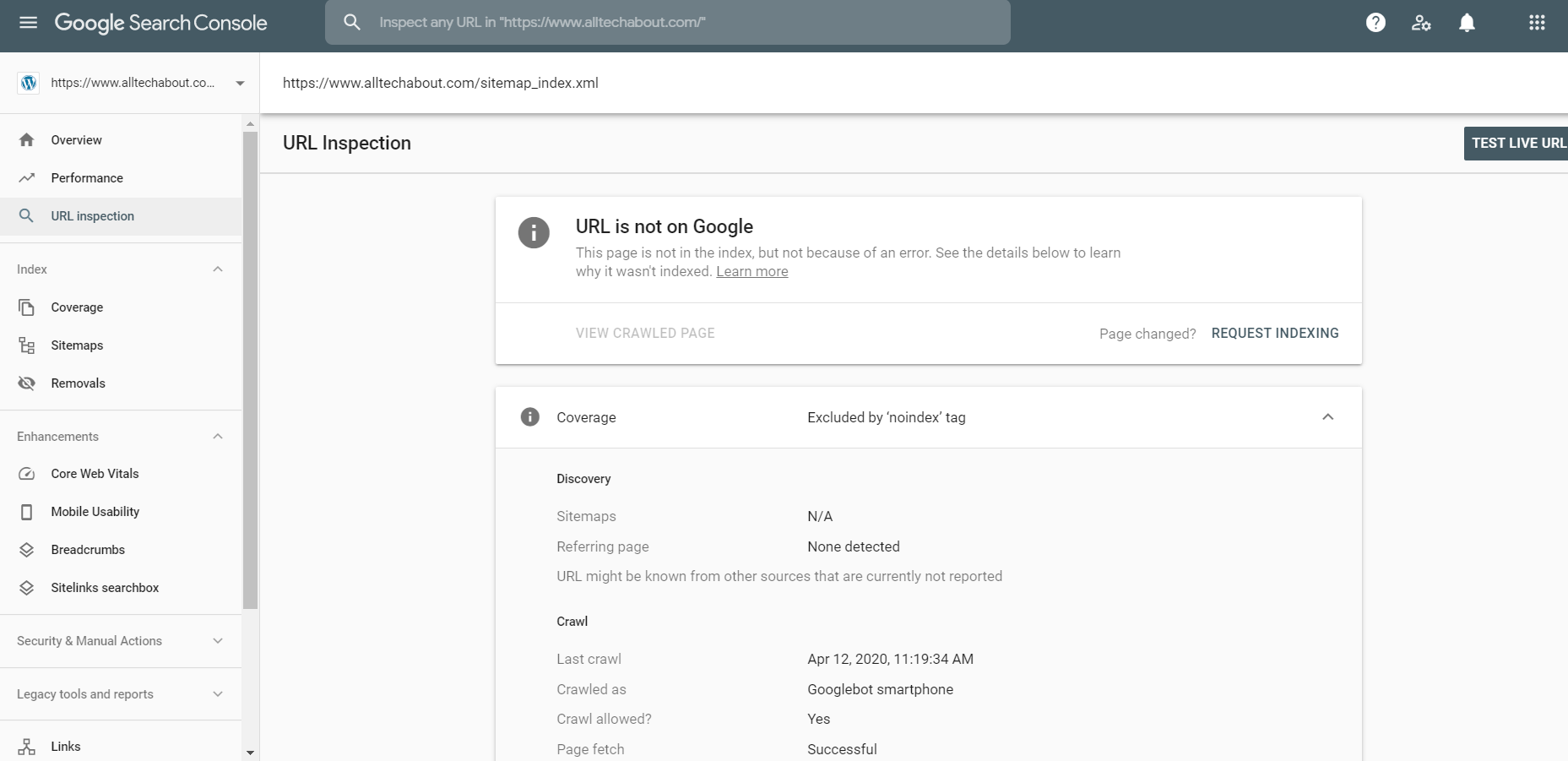

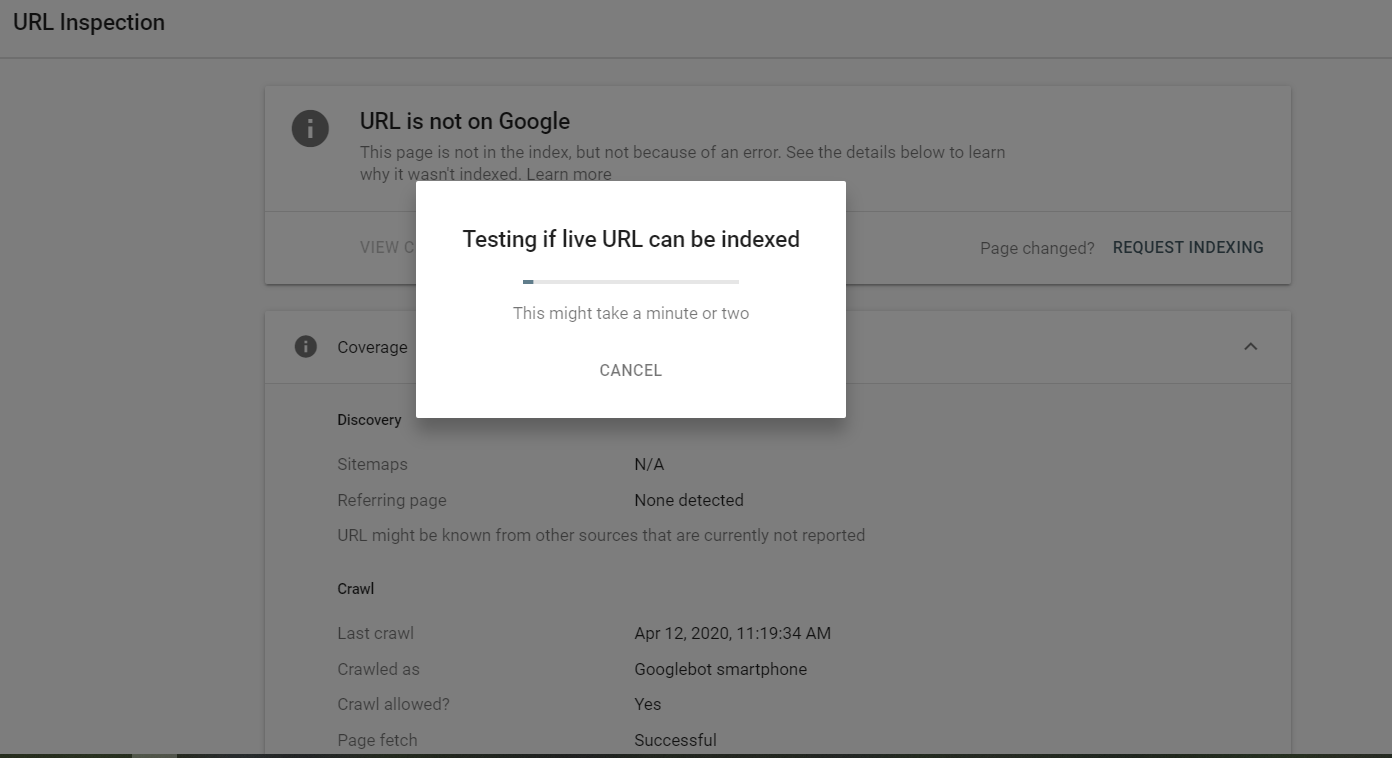

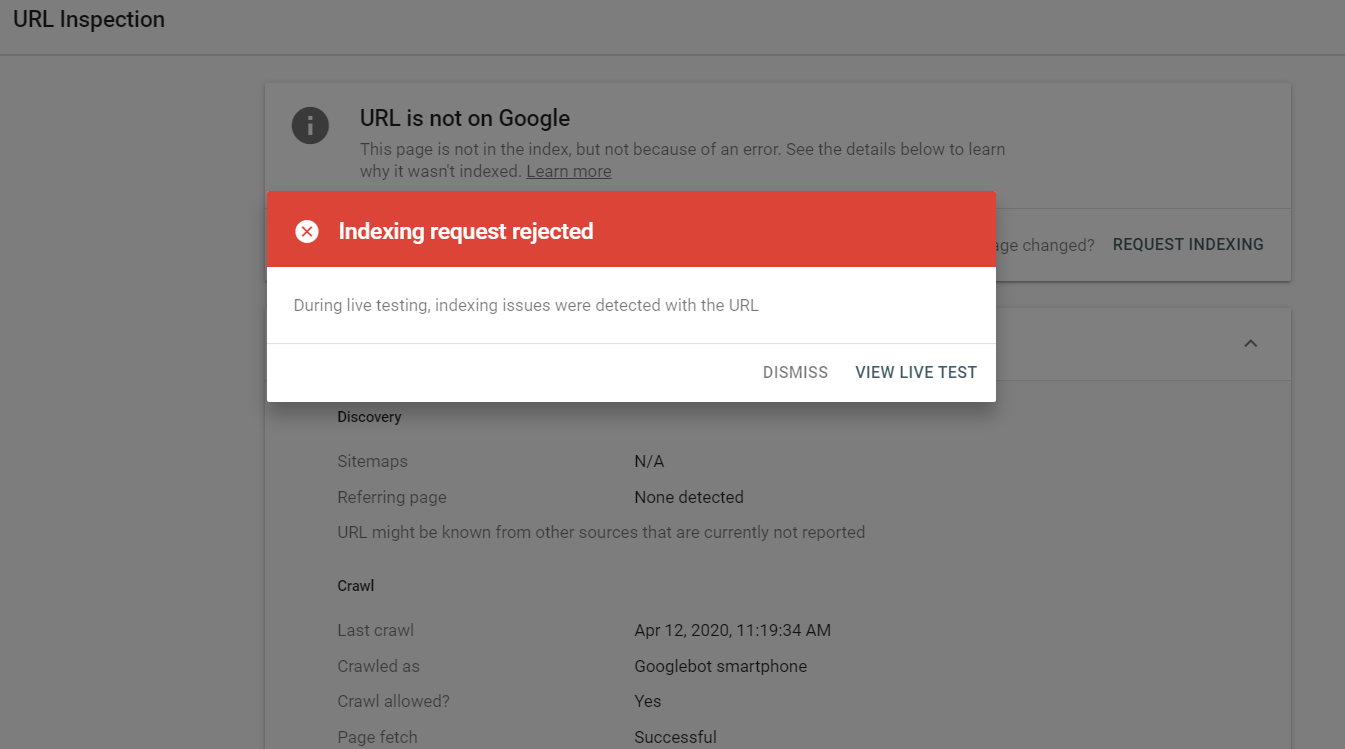
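Before resubmitting, it can help to confirm that the sitemap URL returns a normal HTTP status and that the body really is sitemap XML. The following is a rough sketch using only the Python standard library, not a reproduction of what Search Console does internally. The function names `fetch_status` and `list_sitemap_locs`, the `sitemap-check/0.1` user agent string, and the `www.example.com` sample data are assumptions made for this example.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code the server sends for url (redirects are followed)."""
    request = urllib.request.Request(url, headers={"User-Agent": "sitemap-check/0.1"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx and 5xx responses are raised as exceptions; report their code.
        return err.code


def list_sitemap_locs(xml_text: str) -> list[str]:
    """Return every <loc> URL in a sitemap or sitemap index document."""
    root = ET.fromstring(xml_text)
    if root.tag not in (SITEMAP_NS + "sitemapindex", SITEMAP_NS + "urlset"):
        raise ValueError(f"unexpected root element: {root.tag}")
    return [loc.text.strip() for loc in root.iter(SITEMAP_NS + "loc") if loc.text]


# Hypothetical sitemap index, shaped like a typical sitemap_index.xml.
sample_index = (
    '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<sitemap><loc>https://www.example.com/post-sitemap.xml</loc></sitemap>"
    "<sitemap><loc>https://www.example.com/page-sitemap.xml</loc></sitemap>"
    "</sitemapindex>"
)

print(list_sitemap_locs(sample_index))
```

Calling `fetch_status` on your own sitemap URL should give 200. A 403, 404, or 5xx status points to a hosting, DNS, or firewall problem rather than a problem with the sitemap file itself.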

URL Inspection

How to Fix Excluded by noindex tag in Search Console

You can block search indexing with ‘noindex’: prevent a page from appearing in Google Search by including a noindex meta tag in the page’s HTML code, or by returning a ‘noindex’ header in the HTTP response.

When Googlebot next crawls that page and sees the tag or header, Googlebot will drop that page entirely from Google Search results, regardless of whether other sites link to it.

Implementing noindex

<meta> tag

To prevent most search engine web crawlers from indexing a page on your site, place the following meta tag into the <head> section of your page:

<meta name="robots" content="noindex">

To prevent only Google web crawlers from indexing a page:

<meta name="googlebot" content="noindex">
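To find out where a noindex directive is coming from, you can inspect both the page HTML and the response headers. Here is a minimal sketch with Python's standard `html.parser`, assuming you already have the HTML text and the headers as a dictionary. The names `RobotsMetaParser` and `find_noindex` and the sample inputs are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name.lower(): (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr.get("content", "").lower())


def find_noindex(html: str, headers: dict) -> list[str]:
    """Return the places where a noindex directive was found."""
    sources = []
    parser = RobotsMetaParser()
    parser.feed(html)
    if any("noindex" in directive for directive in parser.directives):
        sources.append("meta tag")
    # Header names are case-insensitive, so compare them in lowercase.
    for name, value in headers.items():
        if name.lower() == "x-robots-tag" and "noindex" in value.lower():
            sources.append("X-Robots-Tag header")
            break
    return sources


# Hypothetical page that carries the meta tag shown above.
page = '<html><head><meta name="robots" content="noindex"></head><body></body></html>'

print(find_noindex(page, {}))                                             # ['meta tag']
print(find_noindex("<html></html>", {"X-Robots-Tag": "noindex, nofollow"}))  # ['X-Robots-Tag header']
```

An empty list means neither source carries noindex. If only the header is reported, the directive is being added by the server, a plugin, or a proxy rather than by the page template.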

Method 1 Fix: “No: ‘noindex’ detected in ‘X-Robots-Tag’ http header”

Check for plugin updates first. If you use Yoast SEO, go to the Tools option and click File editor.

When the File editor opens, review the robots.txt contents.

Check which paths robots.txt allows and which it disallows.

Method 2 Fix: “No: ‘noindex’ detected in ‘X-Robots-Tag’ http header”

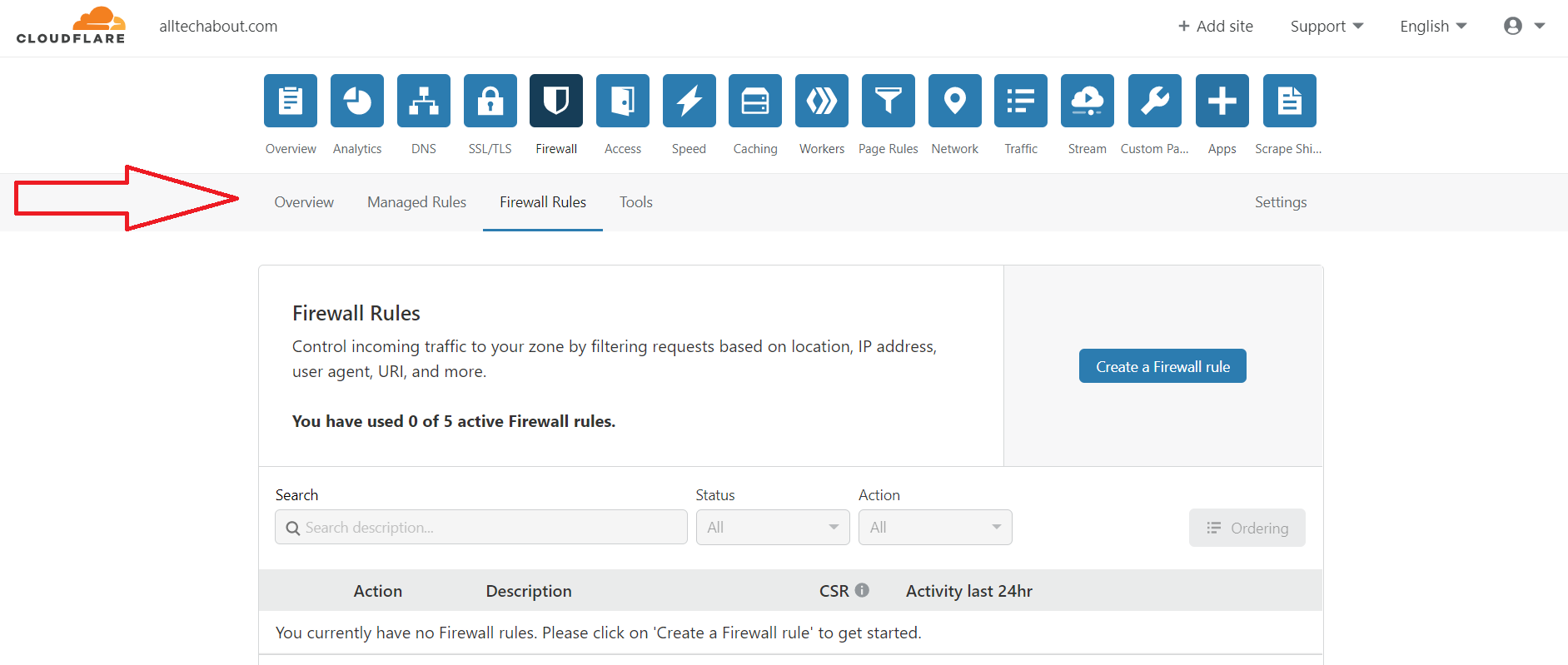

If you use Cloudflare, open your Cloudflare account.

- Click the Firewall tab

- Click the Managed Rules sub-tab

- Scroll to Cloudflare Managed Ruleset section

- Click the Advanced link above the Help

- Change from Description to ID in the modal

- Search for 100035 and check carefully what to disable

- Change the Mode of the chosen rules to Disable

Go to Firewall settings > Managed Rules and turn off Cloudflare Specials as a temporary fix until Cloudflare has a solution.

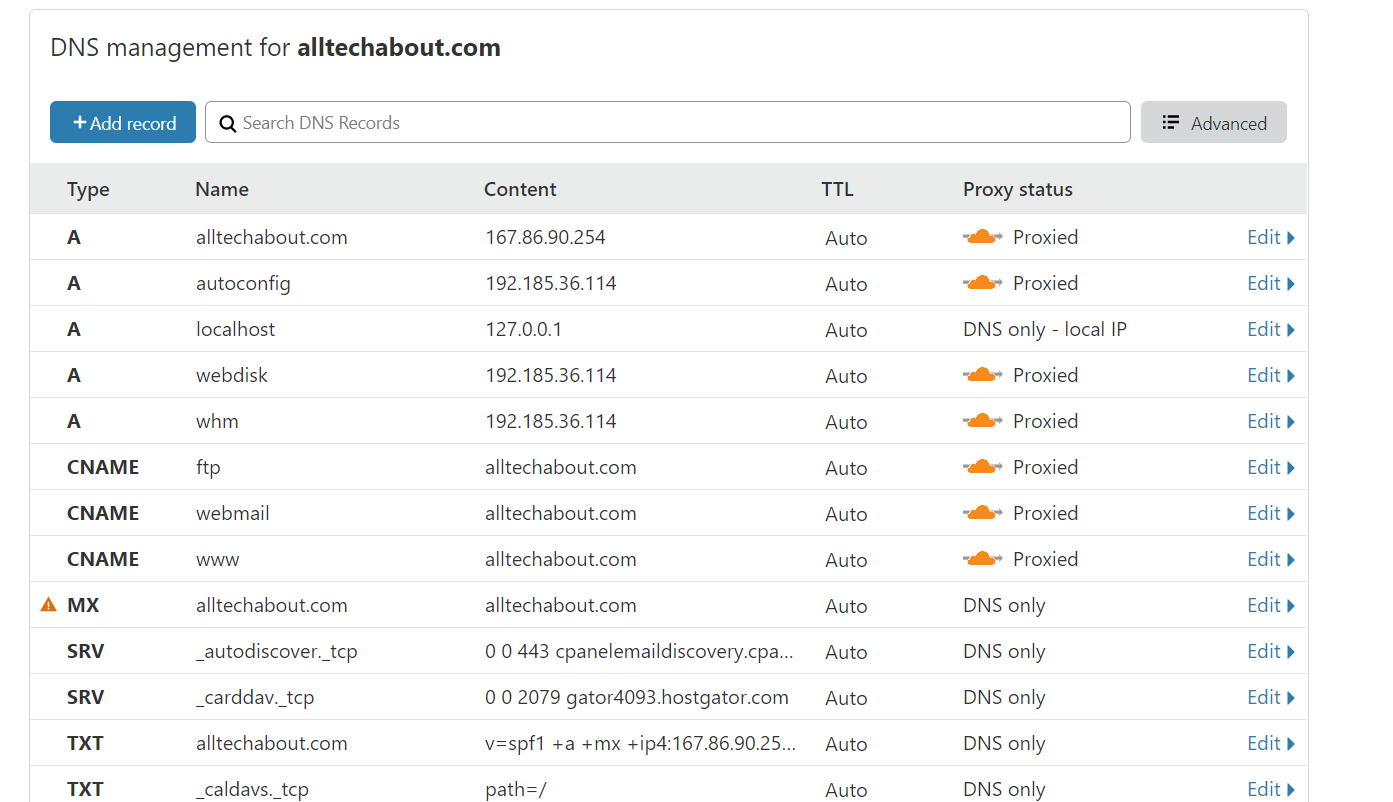

Now go to the DNS settings in Cloudflare.

Click Edit, then Delete.

Now delete all websites from Cloudflare and change the DNS to your real hosting DNS. Then open Cloudflare again, add the site as a new site, and change the DNS.

Save it.

Now go to Google Search Console and submit the sitemap. It should submit successfully.

If you face the same issue on your website, contact us and we will help remove the error. Share this with anyone who faces this problem. Thank you.

Conclusion

In the world of search engine optimization, encountering roadblocks like the “Excluded by noindex tag” or “Couldn’t Fetch” errors can be frustrating. However, once you know their causes and solutions, you are better prepared to handle these problems and keep your website performing well in search engine results.